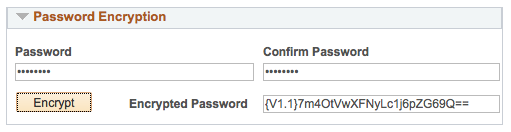

pscipher, psvault and Web Server Passwords

Encrypting passwords is a common activity for PeopleSoft administrators. Many of us take for granted that passwords are encrypted by the system. In…